#OpenSourceDiscovery 97: LibreChat

Multimodal chat with local LLMs and local data, batteries included

Today I discovered…

LibreChat

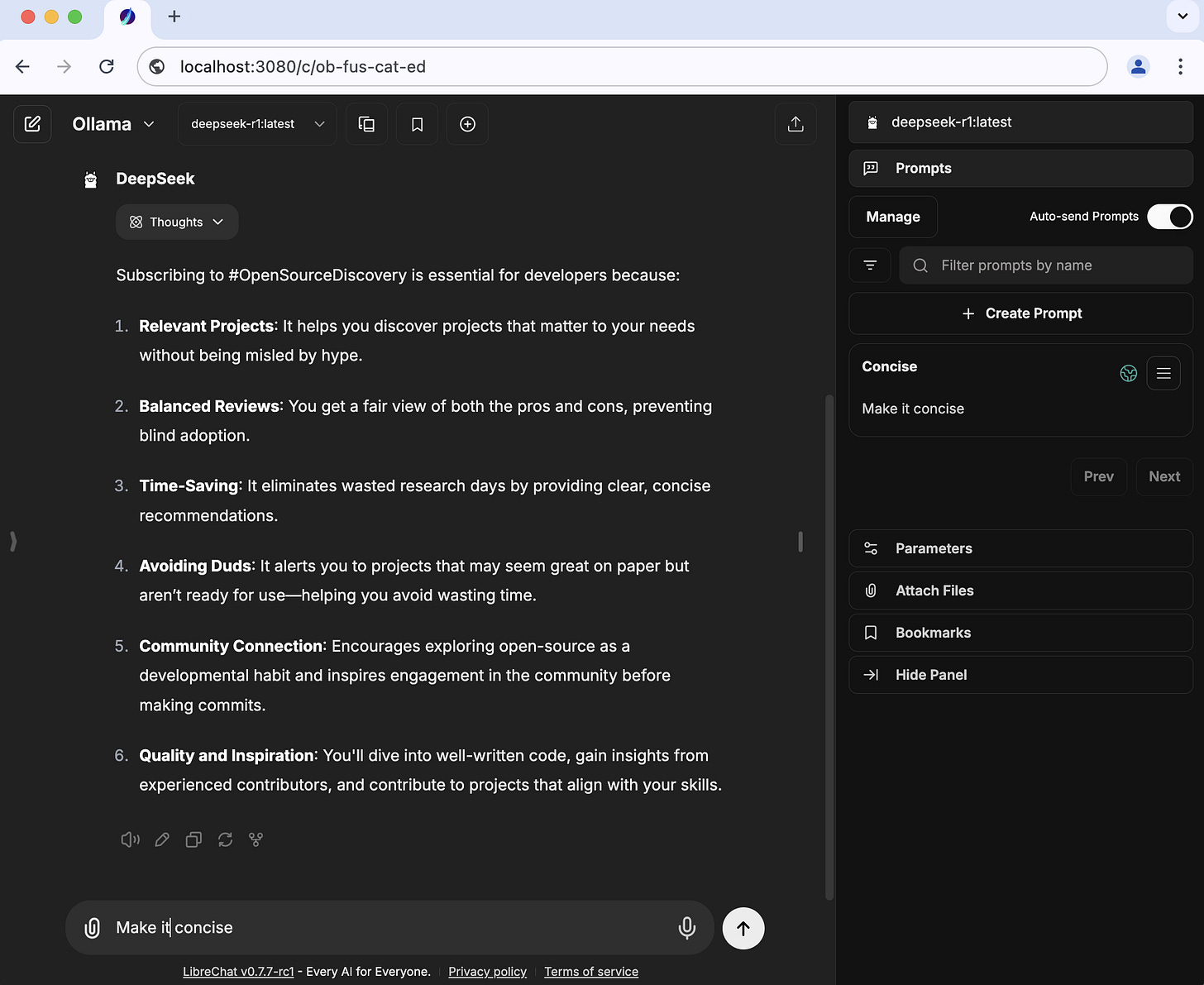

A web app for chatting with AI models. It supports search, speech, multi-user auth, and context management out of the box, and makes it easier to set up advanced features locally, such as file upload, RAG, artifacts, prompt presets, agents (new), and MCP (new).

💖 What I like about LibreChat:

Experience on par with ChatGPT Plus: I found it to be a reliable replacement for ChatGPT Plus, as it has all the ChatGPT Plus features I use, with a similar UX. That meant zero learning curve: I immediately swapped the $20 subscription for usage-based API pricing (about $3 for my moderate usage), and then moved from paid, remote LLMs to local LLMs wherever practical. I run the local LLM API via Ollama.

Easy configuration and settings management: I liked how LibreChat manages both the setup configuration and presets, which made it quick to enable advanced features and personalize the UX. Easy model switching was one of the simple yet effective features. For example, when I was not happy with the performance or quality of a local LLM for a particular task, I could quickly switch to a specific OpenAI or Anthropic model and complete the task. It also helped me test and compare the performance and quality of multiple local models.

Enabled private, offline collaboration: I hosted it on my local network and made it available to all Wi-Fi users, each with their own account, along with some shared prompt templates. This avoided the per-user pricing of expensive cloud LLMs, allowed private, offline collaboration, and gave cheap computers offline access to a powerful local LLM.

👎 What I dislike about LibreChat:

Agent Builder is unstable: Due to a bug, I could not build an agent. That said, I don't really expect LibreChat to support this feature; IMO, that would be an entirely new product.

MCP support is experimental: I could not test it either, because of an issue. I am keen to try it again a few releases down the line.

For the local LLM chat use case, there are hundreds of Open Source projects to choose from. I tried 5-6 of them, and this is the one I stuck with the longest. I like where this project is going. I hope they don't mess it up and keep focusing on improving UX and performance rather than merely adding features.

⭐ Ratings and metrics

Based on my experience, I would rate this project as follows:

Production readiness: 8/10

Docs rating: 7/10

Time to POC (proof of concept): less than a day

Authors: Danny Avila @lgtm_hbu, Marco Beretta @berry13000, Wentao Lyu, Fuegovic

Demo | Source

🛡 License: MIT

Tech Stack: TypeScript, JavaScript, Python

Note: In my trials, I always build the project from source to make sure I am testing what I see on GitHub, not the Docker build or the hosted version.

If you have discovered an interesting Open-Source project and want me to feature it in the newsletter, get in touch with me.

To support this newsletter and Open-Source authors, follow #OpenSourceDiscovery on LinkedIn and Twitter