#OpenSourceDiscovery 94: OpenVINO

Maximize AI performance on budget computers (Intel hardware)

Today I discovered…

OpenVINO

A software toolkit to optimise deep learning models and improve inference speed on CPUs (x86, ARM), GPUs (OpenCL-capable, integrated and discrete) and AI accelerators (Intel NPU). It supports models trained with TensorFlow, PyTorch, ONNX, Keras and PaddlePaddle. My interest was in optimising ONNX models on the CPU.
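A minimal sketch of the ONNX-on-CPU path, using OpenVINO's Python API. It assumes the `openvino` pip package is installed and uses the `import openvino as ov` style of recent releases. The function name `compile_onnx_for_cpu` and the model path are hypothetical placeholders, not part of OpenVINO.

```python
import sys
from pathlib import Path


def compile_onnx_for_cpu(onnx_path: str):
    """Read an ONNX model with OpenVINO and compile it for the CPU plugin.

    Assumes the `openvino` pip package is installed; the import is kept
    local so this file can still be imported on machines without it.
    """
    import openvino as ov

    core = ov.Core()
    # Devices OpenVINO detected on this machine, e.g. ['CPU', 'GPU']
    print("available devices:", core.available_devices)
    # OpenVINO reads .onnx files directly; no separate conversion step is required
    model = core.read_model(onnx_path)
    # "CPU" targets the CPU plugin; "GPU" or "NPU" would target other Intel devices
    return core.compile_model(model, "CPU")


if __name__ == "__main__":
    # Usage: python compile_onnx.py path/to/model.onnx  (hypothetical path)
    if len(sys.argv) > 1 and Path(sys.argv[1]).is_file():
        compiled = compile_onnx_for_cpu(sys.argv[1])
        print("model inputs:", compiled.inputs)
```

In recent releases the compiled model can be called directly on input arrays to run inference. Quantization and pruning are separate steps and are not shown here.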

💖 What I like about OpenVINO:

I was able to make inference of a voice model (the multilingual Whisper small model) 5-10% faster, which made my existing Whisper.cpp setup a little better.

Optimizing deep learning models for consumer-grade PCs makes offline AI accessible to everyone. With Intel hardware leading the budget personal computer market, OpenVINO proved to be a good addition for improving the response time of the LLM I use day to day (Llama 3.2 3B in my case). In the LLM tests I did not measure the impact of OpenVINO on its own, but a combination of techniques (quantization/pruning, ONNX conversion, OpenVINO runtime optimisation, etc.) led to an overall 2-3x inference speedup.
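Isolating OpenVINO's share of the speedup requires timing the same model with and without it on identical inputs. A minimal, library-agnostic timing sketch follows. The `baseline` and `candidate` functions are hypothetical stand-ins; in a real test they would wrap, for example, the unoptimised model and the OpenVINO-compiled one.

```python
import statistics
import time
from typing import Callable


def mean_latency(fn: Callable[[], object], runs: int = 20, warmup: int = 3) -> float:
    """Return the mean wall-clock seconds per call of fn, measured after warm-up calls."""
    for _ in range(warmup):
        fn()  # early calls often include one-off setup cost, so they are not timed
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        samples.append(time.perf_counter() - start)
    return statistics.mean(samples)


def speedup(baseline_s: float, candidate_s: float) -> float:
    """How many times faster the candidate is (2.0 means twice as fast)."""
    return baseline_s / candidate_s


def latency_reduction_pct(baseline_s: float, candidate_s: float) -> float:
    """Percentage of the baseline latency saved by the candidate."""
    return (baseline_s - candidate_s) / baseline_s * 100.0


if __name__ == "__main__":
    # Hypothetical stand-ins for two inference paths fed identical inputs
    def baseline():
        sum(i * i for i in range(100_000))

    def candidate():
        sum(i * i for i in range(90_000))

    base = mean_latency(baseline)
    cand = mean_latency(candidate)
    print(f"speedup: {speedup(base, cand):.2f}x, "
          f"latency reduction: {latency_reduction_pct(base, cand):.1f}%")
```

The two metrics are easy to conflate: a 10% latency reduction (1.0 s down to 0.9 s) is only about a 1.11x speedup, while a 2-3x speedup means latency drops by roughly 50-67%.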

Note: All tests were performed on a budget computer (i5 x86 4-core CPU, 12GB RAM, Linux) and a relatively high-end computer (i7 x86 6-core CPU, 16GB RAM, macOS).

👎 What I dislike about OpenVINO:

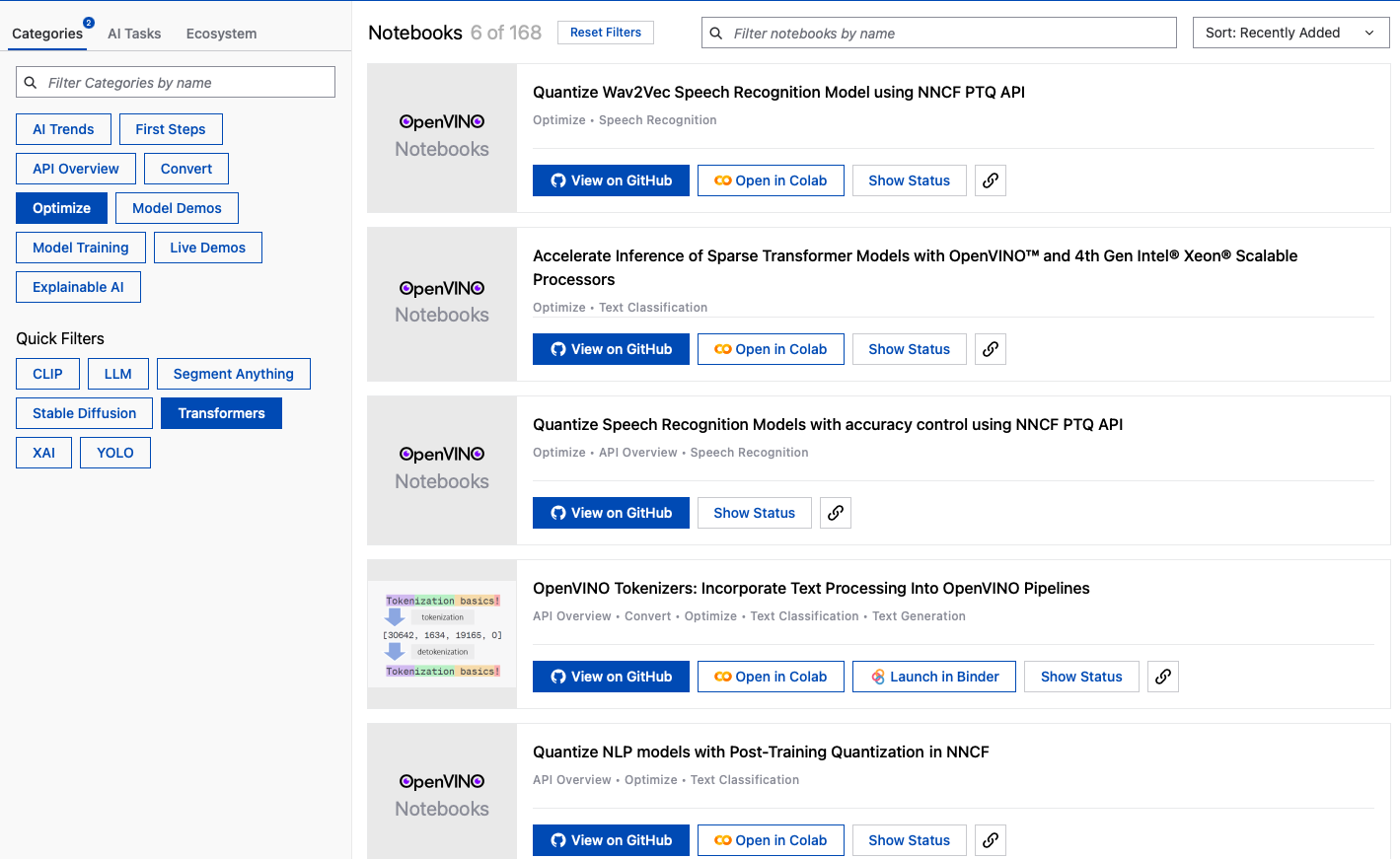

There's a learning curve: you need to understand the whole OpenVINO ecosystem to use it fully and get a significant boost in inference speed.

Testing OpenVINO took a lot of time. To be fair, OpenVINO offers multiple tools and resources to make things easier, including the GenAI flavour of the installation, ready-made examples and pre-optimised models hosted on Hugging Face. But no matter which path I chose, something was broken, and it took time to fix it or work around it. For example, an already converted Whisper model on OpenVINO's Hugging Face account, referenced in their example, returned a 404; macOS showed a security warning for OpenVINO even with installation of software from unidentified developers enabled; and a dependency was missing from the toolchain.

Overall, from a functionality perspective, it does what it says. But as a first-time user, it felt like an extremely time-consuming process. On the surface, it checks all the boxes for a good developer experience, but in practice it didn't deliver one for a first-time user. A major improvement is needed there. For now, stick to the OpenVINO base installation and ask for help when stuck.

⭐ Ratings and metrics

Based on my experience, I would rate this project as follows:

Production readiness: 9/10

Docs rating: 7/10

Time to POC (proof of concept): less than a month

Authors: Intel team - Ilya Lavrenov, Roman Kazantsev, Maxim Vafin, Vladimir Paramuzov, Irina Efode, Sebastian Golebiewski, Karol Blaszczak, Pawel Raasz

Demo | Source

🛡 License: Apache 2.0

Tech Stack: C++, C, Python

If you discover an interesting open-source project and want me to feature it in the newsletter, get in touch with me.

To support this newsletter and open-source authors, follow #OpenSourceDiscovery on LinkedIn and Twitter.

I'm bullish on figuring out offline local AI on budget hardware.

This is an interim review of OpenVINO based on the results I have observed so far.

Given the complexity of the project and its dependence on many variables, I will come back and update this review in the next couple of months, once I have more objective data points and enough experience to evaluate the project fairly.

Have you tried OpenVINO? Do share your experience and help make this review more useful to others.