#OpenSourceDiscovery 91: June

LLM-powered offline voice assistant

Today I discovered…

June

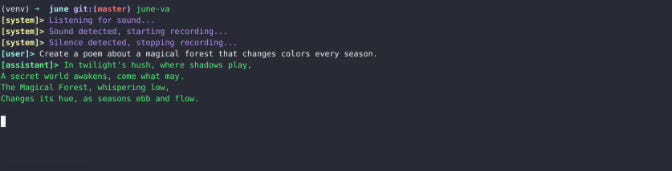

A Python CLI that works as a local voice assistant. It uses Ollama for LLM capabilities, Hugging Face Transformers for speech recognition, and Coqui TTS for text-to-speech synthesis.
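The three-stage flow described above (speech in, LLM answer, speech out) can be sketched as a single conversation turn. This is a minimal illustration, not June's actual code: `voice_turn`, `transcribe`, `generate` and `synthesize` are hypothetical names standing in for the speech-recognition model, the Ollama-served LLM and the Coqui TTS voice. They are passed in as plain functions so the sketch runs without any models installed.

```python
from typing import Callable, Tuple

# Hypothetical stage signatures (not June's API):
# audio bytes -> transcript, prompt -> reply, reply -> audio bytes
Transcriber = Callable[[bytes], str]
Generator = Callable[[str], str]
Synthesizer = Callable[[str], bytes]


def voice_turn(
    audio: bytes,
    transcribe: Transcriber,
    generate: Generator,
    synthesize: Synthesizer,
) -> Tuple[str, str, bytes]:
    """Run one speech-to-speech turn and return (transcript, reply, speech)."""
    transcript = transcribe(audio).strip()
    if not transcript:
        # Nothing intelligible was heard: skip the LLM and TTS calls entirely.
        return "", "", b""
    reply = generate(transcript)
    speech = synthesize(reply)
    return transcript, reply, speech


if __name__ == "__main__":
    # Stub stages so the sketch runs without any models installed.
    t, r, s = voice_turn(
        b"fake-audio",
        transcribe=lambda audio: "what is the capital of France",
        generate=lambda prompt: "Paris.",
        synthesize=lambda text: text.encode("utf-8"),
    )
    print(t, "->", r, "->", s)
```

In a real setup, `transcribe` might wrap a Hugging Face Transformers `pipeline("automatic-speech-recognition")`, `generate` a request to a local Ollama server, and `synthesize` a Coqui TTS model. Keeping the stages injectable also makes each one easy to test in isolation.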

💖 What I like about June:

Simple, focused, and organised code.

It doesn’t promise much, but it does what it promises with no major bumps, i.e., it takes the voice input, gets an answer from the LLM, and speaks the answer out loud.

A perfect choice of models for each task: TTS, STT, and LLM.

👎 What I dislike about June:

It never detected silence in my closed, soundproof office. I had to switch off the mic; only then would it stop taking the voice command input and start processing.

It used about 2.5 GB of RAM on top of the 5+ GB used by Ollama. On an i5 processor with 12 GB of RAM, the experience was far too slow for any practical use. From past experience, I believe Apple’s M1 or M2 chips could have delivered a much better experience.
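About the silence issue: voice assistants commonly decide that a command has ended by measuring the loudness (RMS energy) of short audio frames and stopping once enough consecutive frames fall below a threshold. The sketch below shows that energy-based technique on lists of 16-bit PCM samples. It is an assumption about the general approach, not a description of June's implementation; `utterance_end`, `threshold` and `min_silent_frames` are hypothetical names and values that would need tuning per microphone. It also hints at one plausible failure mode: if the threshold sits below the microphone's own noise floor, even a soundproof room never counts as silent.

```python
import math
from typing import Iterable, Optional, Sequence


def rms(frame: Sequence[int]) -> float:
    """Root-mean-square amplitude of one frame of 16-bit PCM samples."""
    if not frame:
        return 0.0
    return math.sqrt(sum(s * s for s in frame) / len(frame))


def utterance_end(
    frames: Iterable[Sequence[int]],
    threshold: float = 500.0,     # hypothetical; must sit above the mic's noise floor
    min_silent_frames: int = 30,  # e.g. 30 frames x 30 ms = ~0.9 s of silence
) -> Optional[int]:
    """Return the index of the frame at which enough consecutive silence follows
    speech, or None if that never happens (the recording would never stop)."""
    silent_run = 0
    heard_speech = False
    for i, frame in enumerate(frames):
        if rms(frame) >= threshold:
            heard_speech = True
            silent_run = 0
        elif heard_speech:
            silent_run += 1
            if silent_run >= min_silent_frames:
                return i
    return None


if __name__ == "__main__":
    # 480 samples = 30 ms at 16 kHz.
    speech = [[2000, -2000] * 240] * 10  # loud frames
    quiet = [[10, -10] * 240] * 30       # near-silent frames
    hum = [[600, -600] * 240] * 30       # background noise above the threshold
    print(utterance_end(speech + quiet))  # 39
    print(utterance_end(speech + hum))    # None
```

A fixed threshold is the simplest design; adaptive variants estimate the noise floor during the first few frames and set the threshold relative to it.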

💡 What if

I might not use this project as an end user at the moment, but it does inspire ideas, either for new projects or for taking this project further.

What if it had a higher level of abstraction, integrating with other LLM-based projects such as open-interpreter to add capabilities like executing the relevant bash command for a voice prompt such as “remove EXIF metadata from all the images in my pictures folder”? I would happily wait a long time for such a command to complete on my mid-range machine, and it would still be a great experience despite the slow execution speed.

What if it had a lower level of abstraction, where I could easily swap the models, or use it as a package to add voice capabilities to my own LLM-based project through a neat API?
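One way to get the swappable-model API imagined above is to define each stage as a small interface and compose the assistant from whichever implementations you like. The sketch below is hypothetical; June does not necessarily expose anything like it. `SpeechToText`, `LLM`, `TextToSpeech` and `VoiceAssistant` are invented names, and the decode/echo/shout/encode backends are placeholders for real models.

```python
from dataclasses import dataclass, replace
from typing import Protocol


class SpeechToText(Protocol):
    def transcribe(self, audio: bytes) -> str: ...


class LLM(Protocol):
    def reply(self, prompt: str) -> str: ...


class TextToSpeech(Protocol):
    def speak(self, text: str) -> bytes: ...


@dataclass(frozen=True)
class VoiceAssistant:
    """Composes three independently swappable backends (hypothetical API)."""
    stt: SpeechToText
    llm: LLM
    tts: TextToSpeech

    def respond(self, audio: bytes) -> bytes:
        return self.tts.speak(self.llm.reply(self.stt.transcribe(audio)))


# Placeholder backends standing in for real models.
class DecodeSTT:
    def transcribe(self, audio: bytes) -> str:
        return audio.decode("utf-8")


class EchoLLM:
    def reply(self, prompt: str) -> str:
        return f"You said: {prompt}"


class ShoutLLM:
    def reply(self, prompt: str) -> str:
        return prompt.upper()


class EncodeTTS:
    def speak(self, text: str) -> bytes:
        return text.encode("utf-8")


if __name__ == "__main__":
    assistant = VoiceAssistant(stt=DecodeSTT(), llm=EchoLLM(), tts=EncodeTTS())
    print(assistant.respond(b"hello"))  # b'You said: hello'
    # Swap only the LLM; the other stages are reused unchanged.
    louder = replace(assistant, llm=ShoutLLM())
    print(louder.respond(b"hello"))     # b'HELLO'
```

Because `Protocol` uses structural typing, any class with a matching method (for instance one wrapping a different speech-recognition checkpoint or another Ollama model) would fit without inheriting from anything.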

⭐ Ratings and metrics

Based on my experience, I would rate this project as follows:

Production readiness: 6/10

Docs rating: 7/10

Time to POC (proof of concept): less than a week

Author: Mezbaul Haque

Demo | Source

🛡 License: MIT

Tech Stack: Python, PyAudio, Ollama, Hugging Face Transformers, Coqui TTS

If you discover an interesting Open-Source project and want me to feature it in the newsletter, get in touch with me.

To support this newsletter and Open-Source authors, follow #OpenSourceDiscovery on LinkedIn and Twitter.

P.S. A word on why the model choices for TTS, STT, and LLM mattered so much to me: if it had not been those exact models, I wouldn't have even tried it.